MAGICAL, Part 1

"What's that for?" I asked. The question referred to a large screen in front of me, with my newly-captured photograph in the bottom corner. Where was the camera? In the picture, I was trying to spot it.

"What's that for?" I asked. The question referred to a large screen in front of me, with my newly-captured photograph in the bottom corner. Where was the camera? In the picture, I was trying to spot it.

The British Airways gate attendant at Chicago's O'Hare airport tapped the screen and a big green checkmark appeared.

"Customs." That was all the explanation she offered. It had all happened so fast there was no opportunity to object.

Behind me was an unforgiving line of people waiting to board. Was this a good time to stop to ask:

- What is the specific purpose of collecting my image?

- What legal basis do you have for collecting it?

- Who will be storing the data?

- How long will they keep it?

- Who will they share it with?

- Who is the vendor that makes this system and what are its capabilities?

It was not.

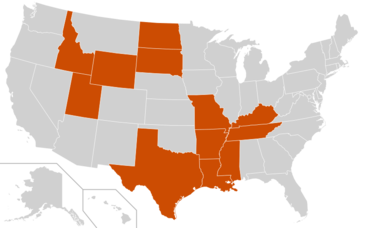

I boarded, tamely, rather than argue with a gate attendant who certainly didn't make the decision to install the system and was unlikely to know much about its details. Plus, we were in the US, where the principles of data protection law don't really apply - and even if they did, they wouldn't apply at the border - even, it appears, in Illinois, the only US state to have a biometric privacy law.

I *did* know that US Customs and Border Protection had begun trialing facial recognition in selected airports, beginning in 2017. Long-time readers may remember a net.wars report from the 2013 Biometrics Conference about the MAGICAL [sic] airport, circa 2020, through which passengers flow unimpeded because their face unlocks all. Unless, of course, they're "bad people" who need to be kept out.

I think I even knew - because of Edward Hasbrouck's indefatigable reporting on travel privacy - that at various airports airlines are experimenting with biometric boarding. This process does away entirely with boarding cards; the airline captures biometrics at check-in and uses them to entirely automate the "boarding process" (a favorite bit of airline-speak of the late comedian George Carlin). The linked explanation claims this will be faster because you can have four! automated lanes instead of one human-operated lane. (Presumably then the four lanes merge into a giant pile-up in the single-lane jetway.)

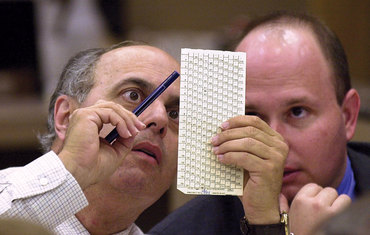

It was nonetheless startling to be confronted with it in person - and with no warning. CBP proposed taking non-US citizens' images in 2020, when none of us were flying, and Hasbrouck wrote earlier this year about the system's use in Seattle. There was, he complained, no signage to explain the system despite the legal requirement to do so, and the airport's website incorrectly claimed that Congress mandated capturing biometrics to identify all arriving and departing international travelers.

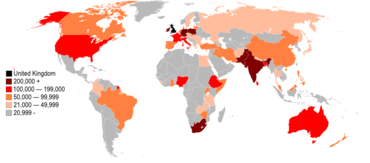

According to Biometric Update, as of last February, 32 airports were using facial recognition on departure, and 199 airports were using facial recognition on arrival. In total, 48 million people had their biometrics taken and processed in this way in fiscal 2021. Since the program began in 2018, the number of alleged impostors caught: 46.

"Protecting our nation, one face at a time," CBP calls it.

On its website, British Airways says passengers always have the ability to opt out except where biometrics are required by law. As noted, it all happened too fast. I saw no indication on the ground that opting out was possible, even though notice is required under the Paperwork Reduction Act (1980).

As Hasbrouck says, though, travelers, especially international travelers and even more so international travelers outside their home countries, go through so many procedures at airports that they have little way to know which are required by law and which are optional, and arguing may get you grounded.

He also warns that the system I encountered is only the beginning. "There is an explicit intention worldwide that's already decided that this is the new normal. All new airports will be designed and built with facial recognition built into them for all airlines. It means that those who opt out will find it more and more difficult and more and more delaying."

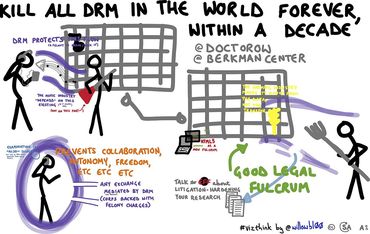

Hasbrouck, who is probably the world's leading expert on travel privacy, sees this development as dangerous. Largely, he says, it's happening unopposed because the government's desire for increased surveillance serves the airlines' own desire to cut costs through automating their business processes - which include herding travelers onto planes.

"The integration of government and business is the under-noticed aspect of this. US airports are public entities but operate with the thinking of for-profit entities - state power merged with the profit motive. State *monopoly* power merged with the profit motive. Automation is the really problematic piece of this. Once the infrastructure is built it's hard for airline to decide to do the right thing." That would be the "right thing" in the sense of resisting the trend toward "pre-crime" prediction.

"The airline has an interest in implying to you that it's required by government because it pressures people into a business process automation that the airline wants to save them money and implicitly put the blame on the government for that," he says. "They don't want to say 'we're forcing you into this privacy-invasive surveillance technology'."

Illustrations: Edward Hasbrouck in 2017.

Wendy M. Grossman is the 2013 winner of the Enigma Award. Her Web site has an extensive archive of her books, articles, and music, and an archive of earlier columns in this series. Stories about the border wars between cyberspace and real life are posted occasionally during the week at the net.wars Pinboard - or follow on Twitter.

In the spring of 2020, as country after country instituted lockdowns, mandated

They got what they wanted, and now they're screwing it up.

All national constitutions are written to a threat model that is clearly visible if you compare what they say to how they are put into practice. Ireland, for example, has the same right to freedom of religion embedded in its constitution as the US bill of rights does. Both were reactions to English abuse, yet they chose different remedies. The nascent US's threat model was a power-abusing king, and that focus coupled freedom of religion with a bar on the establishment of a state religion. Although the Founding Fathers were themselves Protestants and likely imagined a US filled with people in their likeness, their threat model was not other beliefs or non-belief but the creation of a supreme superpower derived from merging state and church. In Ireland, for decades, "freedom of religion" meant "freedom to be Catholic". Campaigners for the separation of church and state in 1980s Ireland, when I lived there, advocated fortifying the constitutional guarantee with laws that would make it true in practice for everyone from atheists to evangelical Christians.

An unexpected bonus of the gradual-then-sudden disappearance of Boris Johnson's government, followed by

What if,

It's only an accident of covid that this year's

Last week, we learned that back last October

In the last few weeks, unlike any other period in the 965 (!) previous weeks of net.wars columns, there were *five* pieces of (relatively) good news in the (relatively) restricted domain of computers, freedom, and privacy.

"My iPhone won't stab me in my bed,"

"My iPhone won't stab me in my bed,"

To these known evils, the German Pirate Party MEP

It is just over a year since

After months of anxiety among digital rights campaigners such as the

In his draft paper, Swire favors allowing greater surveillance of non-citizens than citizens. While some countries - he cited the US and Germany - provide greater protection from surveillance to their own citizens than to foreigners, there is little discussion about why that's justified. In the US, he traces the distinction to Watergate, when Nixon's henchmen were caught unacceptably snooping on the opposition political party. "We should have very strong protections in a democracy against surveilling the political opposition and against surveilling the free press." But granting everyone else the same protection, he said, is unsustainable politically and incorrect as a matter of law and philosophy.

"Regulatory oversight is going to be inevitable,"

"Regulatory oversight is going to be inevitable,"  "We were kids working on the new stuff," said Kevin Werbach. "Now it's 20 years later and it still feels like that."

"We were kids working on the new stuff," said Kevin Werbach. "Now it's 20 years later and it still feels like that." Both

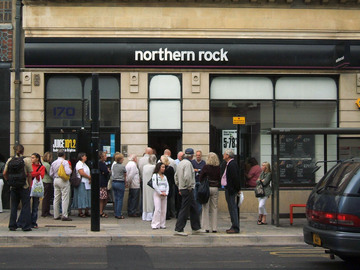

The financial revolution due to hit Britain in mid-January has had surprisingly little publicity and has little to do with the money-related things making news headlines over the last few years. In other words, it's not a new technology, not even a cryptocurrency. Instead, this revolution is regulatory: banks will be required to open up access to their accounts to third parties.

As anyone attending the annual

Would you rather be killed by a human or a machine?

There's

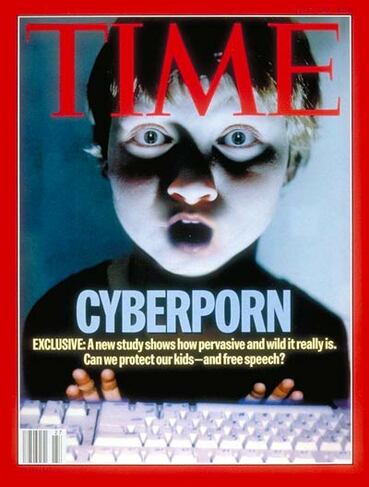

There are many reasons why, Bryan Schatz finds at Mother Jones,

The second issue touches directly on privacy. Soon after the news of the Las Vegas shooting broke, a friend posted a link to the 2016 GQ article
