Re-accommodating...

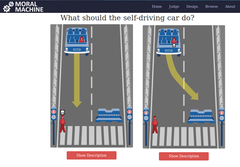

Last year, some folks at MIT implemented a version of the trolley problem they called the Moral Machine, a series of scenarios in which you choose which people and/or pets a self-driving car should sacrifice in a fatal accident. I chose to save the old people at the expense of the children and animals, on the basis that experience and knowledge are societally expensive to replace.

The problem with this experiment is its unreality. Neither humans nor machines make decisions this way. For humans, instincts of pure self-preservation kick in. Mostly, we just try to stop, like the people in the accelerating Toyotas (whose best strategy would appear to have been to turn the car off entirely), but the obvious response is to try to save ourselves and our passengers while trying not to hit anyone else. There is never going to be enough time or cognitive space to count the pets or establish that the potential victim on the sidewalk is a Nobel prize-winning physicist or debate whether the fact that he's already done his significant work means it would be better to hit him to spare the baby to the left.

Given the small amounts of time at its disposal in a crunch, I'm willing to bet that the software driving autonomous vehicles won't make these calculations either. Certainly not today, when we're still struggling to distinguish between a truck and a cloud. Ultimately, algorithms for making such choices will start with simple rules that then get patched by special interests to become a monster like our tax system. The values behind the rules will be crucial, which is the point of MIT's experiment.

The simplest possible setting is: kill the fewest people. So, now, do you want to buy a self-driving car that may turn on you and kill your child in a crisis, for the statistically greater good? These values will be determined by car and software manufacturers, and given their generally risk-averse lawyers it's more likely the vehicle will, as in Dexter Palmer's Version Control, try to hand off both the wheel and the liability to the human driver, who will then become, as Madeleine Elish said at We Robot 2016, the human-machine system's moral crumple zone.

There are already situations where exactly this kind of optimization takes place and this week we saw one of them at play in the case of the passenger dragged bleeding and probably concussed off United Airlines flight 3411 (operated by Republic Airline). Farhad Manjoo, writing in the New York Times, argues that the airlines' road to the bottom of customer service has been technology-fueled. Of course. The quality of customer service is not easily quantified for either airline or customer. But seat prices, the cost of food and beverages, traveler numbers, staffing levels, allowed working hours - all these are numbers that can be fed into an algorithm with the operational instruction, "Optimize for profits". Airline travel today is highly hierarchical; every passenger's financial value to the airline can be precisely calculated.

MIT's group focused on things like jobs (physicist, criminal), success (Nobel prize!), age, and apparent vulnerability (toddler, disabled...). A different group might calculate social value, asking how many people would be hurt - and how much - by your death? That approach might save the parents of young children and kill anti-social nerds and old people whose friends are all dead. Neither approach is how today's algorithms value people, because they are predominantly owned by businesses, as Frank Pasquale has written in his book Black Box Society.

It is the job of the programming humans to apply the ethical brakes. But, as has been pointed out, for example by University of Maryland professor Danielle Citron in her 2008 paper Technological Due Process, programmers are bad at that, and on-the-ground humans tend to do what the machine tells them. This is especially true of overworked, overscheduled humans whose bosses penalize discretion. Maplight has documented the money United has spent lobbying against consumer-friendly airline regulation. And so: even though it's incredible to most of us, despite a planeful of protesting passengers, neither the flight crew nor the Chicago Aviation Department stopped the proceedings that led to a person bleeding on the floor, concussed, with two broken front teeth and requiring sinus surgery because he protested, like a lot of us would, at being told to vacate the seat he'd paid for and been previously authorized to occupy. All backed by a 35,000-word contract of carriage, which United appears to have violated in any case.

Bloomberg recently reported that airlines make more money selling miles than seats. In other words, like Google and Facebook, airlines are now more multi-sided markets and less travel service companies. Even once-a-decade flyers see themselves as customers who should be "always right". Instead, financial reality, combined with post-consolidation lack of competition and post-9/11 suspicion, means they're wrong.

Cue Nicole Gelinas in City Journal, "The customer isn't always right. But an airline that assaults a customer is always wrong." The public largely agrees, so one hopes that what comes out of this is a re-accommodation of values. There's more where this came from.

Illustrations: United jet at Chicago O'Hare; MIT Moral Machine; Danielle Citron.

Wendy M. Grossman is the 2013 winner of the Enigma Award. Her Web site has an extensive archive of her books, articles, and music, and an archive of earlier columns in this series. Stories about the border wars between cyberspace and real life are posted occasionally during the week at the net.wars Pinboard - or follow on Twitter.