A garden of snakes

It's hard to properly enjoy I-told-you-so schadenfreude when you know, as Juan Vargas (D-CA) pointed out this week, that the people most affected by the latest cryptocurrency collapse are disproportionately those who can least afford it. What began as a cultish libertarian desire to bypass the global financial system became a vector for wild speculation, and is now the heart of a series of collapsing frauds.

From the beginning, I've called bitcoin and its sequels "the currency equivalent of being famous for being famous". Crypto(currency) fans like to claim that the world's fiat currencies don't have any underlying value either, but those are backed by the full faith and credit of governments and economies. Logically, crypto appeals most to those with the least reason to trust their governments: the very rich who resent paying taxes and those who think they have nothing to lose.

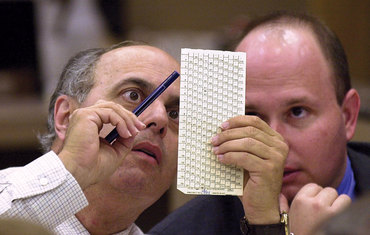

This week the US House and Senate both held hearings on the collapse of cryptocurrency exchange and hedge fund FTX and its deposed, arrested, and charged CEO Sam Bankman-Fried. The key lesson: we can understand the main issues surrounding FTX and its fellow cryptocurrency exchanges without understanding either the technical or financial intricacies.

A key question is whether the problem is FTX or the entire industry. Answers largely split along partisan lines. Republican members singled out FTX, and tended to blame Securities and Exchange Commission chair Gary Gensler. Democrats were more likely to condemn the entire industry.

As Jesús G. "Chuy" García (D-IL) put it, "FTX is not an anomaly. It's not just one corrupt guy stealing money, it's an entire industry that refuses to comply with existing regulation that thinks it's above the law." Or, per Brad Sherman (D-CA), "My fear is that we'll view Sam Bankman-Fried as just one big snake in a crypto garden of Eden. The fact is, crypto is a garden of snakes."

When Sherrod Brown (D-OH) asked whether FTX-style fraud existed at other crypto firms, all four expert speakers said yes.

Related is the question of whether and how to regulate crypto, which begins with the problem of deciding whether crypto assets are securities under the decades-old Howey test. In its ongoing suit against Ripple, Gensler's SEC argues that they should be regulated as securities. Lack of regulation has enabled crypto "innovation" - and let it recreate practices long banned in traditional financial markets. For an example, see Ben McKenzie and Jacob Silverman's analysis of the endemic conflicts of interest at leading crypto exchange Binance, and of the extreme risks it allows customers to take that securities regulations would bar.

Regulation could correct some of this. McKenzie gave the Senate committee some numbers: fraudulent financier Bernie Madoff had 37,000 clients; FTX had 32 times that in the US alone. The collective lost funds of the hundreds of millions of victims worldwide could be ten times bigger than Madoff's.

But: would regulating crypto clean up the industry or lend it legitimacy it does not deserve? Skeptics ask the same question about regulating alternative medicine practitioners.

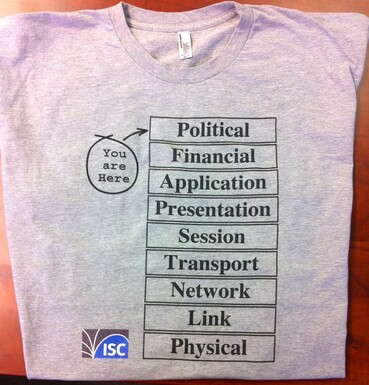

Some background. As software engineer Stephen Diehl explains in his new book, Popping the Crypto Bubble, securities are roughly the opposite of money. What you want from money is stability; sudden changes in value spark cost-of-living crises and economic collapse. For investors, stability is the enemy: they want investments' value to go up. The countervailing risk is why the SEC requires companies offering securities to publish enough truthful information to enable investors to make a reasonable assessment.

In his book, Diehl compares crypto to previous bubbles: the Internet, tulips, the railways, the South Sea. Some, such as the Internet and the railways, cost early investors fortunes but leave behind valuable new infrastructure and technologies on which vast new industries are built. Others, like tulips, leave nothing of new value. Diehl, like other skeptics, believes cryptocurrencies are like tulips.

The idea of digital cash was certainly not new in 2008, when "Satoshi" published their seminal paper on bitcoin. The earliest work is usually attributed to David Chaum: his 1982 dissertation contained the first known proposal for a blockchain protocol, he proposed digital cash in a 1983 paper, and in 1990 he set up a company to commercialize it - way too early. Crypto's ethos came from the cypherpunks mailing list, which was founded in 1992 and explored the idea of using cryptography to build a new global financial system.

Diehl connects the reception of Satoshi's paper to its timing, just after the 2007-2008 financial crisis. There's some logic there: many have never recovered.

For a few years in the mid-2010s, a common claim was that cryptocurrencies were bubbles but the blockchain would provide enduring value. Notably disagreeing was Michael Salmony, who startled the 2016 Tomorrow's Transactions Forum by saying the blockchain was a technology in search of a solution. Last week, IBM and Maersk announced they are shutting down their enterprise blockchain because, Dan Robinson writes at The Register, despite the apparently ideal use case, they couldn't attract industry collaboration.

More recently we've seen the speculative bubble around NFTs, but otherwise we've mostly heard about cryptocurrencies' wildly careening prices in US dollars and the amount of energy mining them consumes - until this year, when escalating crashes and frauds took over. Distrust does not build value.

Illustrations: The Warner Brothers coyote, realizing he's standing on thin air.

Wendy M. Grossman is the 2013 winner of the Enigma Award. Her Web site has an extensive archive of her books, articles, and music, and an archive of earlier columns in this series. Stories about the border wars between cyberspace and real life are posted occasionally during the week at the net.wars Pinboard - or follow on Twitter.
