Creepiness at scale

This week, Amazon announced a prospective new feature for its Alexa "smart" speakers: the ability to mimic anyone's voice from less than one minute of recording. Amazon is, incredibly, billing this as the chance to memorialize a dead loved one as a digital assistant.

As someone commented on Twitter, technology companies are not *supposed* to make ideas from science fiction dystopias into reality. As so often, Philip K. Dick got here first; in his 1969 novel Ubik, a combination of psychic powers and cryonics lets (rich) people visit and consult their dead, whose half-life fades with each contact.

Amazon can call this preserving "memories", but at The Overspill Charles Arthur is likely closer to reality, calling it "deepfake for voice". Except that where deepfakes emerged from a Reddit group and require some technical effort, Amazon's functionality will be right there in millions of people's homes, planted by one of the world's largest technology companies. Questions abound: who gets access to the data and models, and will Amazon link it to its Ring doorbell network and thousands of partnerships with law enforcement?

The answers, like the service, are probably years off. The lawsuits may not be.

This piece began as some notes on the company that so far has been the technology industry's creepiest: the facial image database company Clearview AI. Clearview, which has built its multibillion-item database by scraping images off social media and other publicly accessible sites, has fallen foul of regulators in the UK, Australia, France, Italy, Canada, and Illinois. In a world full of intrusive companies collecting mass amounts of personal data about all of us, Clearview AI still stands out.

It has few, if any, defenders outside its own offices. For one thing, unlike Facebook or Google, it offers us - citizens, consumers - nothing in return for our data, which it appropriates wholesale. It is the ultimate two-sided market in which we are nothing but salable data points. It came to public notice in January 2020, when Kashmir Hill exposed its existence and asked if this was the company that was going to end privacy.

Clearview, which bills itself as "building a secure world one face at a time", defends itself against both data protection and copyright laws by arguing that scraping and storing billions of images from what law enforcement likes to call "open source intelligence" is legitimate because the images are posted in public. Even if that were how data protection laws work, it's not how copyright works! Both Twitter and Facebook told Clearview to stop scraping their sites shortly after Hill's article appeared in 2020, as did Google, LinkedIn, and YouTube. It's not clear if the company stopped or deleted any of the data.

Among regulators, Canada was first, starting federal and provincial investigations in June 2020, when Clearview claimed its database held 3 billion images. In February 2021, the Canadian Privacy Commissioner, Daniel Therrien, issued a public warning that the company could not use facial images of Canadians without their explicit consent. Clearview, which had been selling its service to the Royal Canadian Mounted Police among dozens of others, opted to leave the country and mount a court challenge - but not to delete images of Canadians, as Therrien had requested.

In December 2021, the French data protection authority, CNIL, ordered Clearview to delete all the data it holds relating to French citizens within two months, and threatened further sanctions and administrative fines if the company failed to comply within that time.

In March 2022, with Clearview openly targeting 100 billion images and commercial users, Italian DPA Garante per la protezione dei dati personali fined Clearview €20 million, ordered it to delete any data it holds on Italians, and banned it from further processing of Italian citizens' biometrics.

In May 2022, the UK's Information Commissioner's Office fined the company £7.5 million and ordered it to delete the UK data it holds.

All these cases are based on GDPR and uphold the same complaints: Clearview has no legal basis for holding the data, and it is in breach of data retention rules and subjects' rights. Clearview appears not to care, taking the view that it is not subject to GDPR because it's not a European company.

It couldn't make that argument to the state of Illinois. In early May 2022, Clearview and the American Civil Liberties Union settled a court action filed in May 2020 under Illinois' Biometric Information Privacy Act. Result: Clearview has accepted a ban on selling its services or offering them for free to most private companies *nationwide* and a ban on selling access to its database to any private or state or local government entity, including law enforcement, in Illinois for five years. Clearview has also developed an opt-out form for Illinois residents to use to withdraw their photos from searches, and continues to try to filter out photographs taken in or uploaded from Illinois. On its website, Clearview paints all this as a win.

Eleven years ago, Google's then-CEO, Eric Schmidt, thought automating facial recognition was too creepy to pursue, and synthesizing a voice from recordings took months. The problem is no longer that potentially dangerous technology has developed faster than laws can be formulated to control it. It's that we now have well-funded companies that don't care about either.

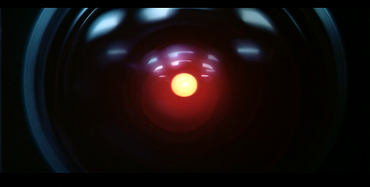

Illustration: HAL, from 2001: A Space Odyssey.

Wendy M. Grossman is the 2013 winner of the Enigma Award. Her Web site has an extensive archive of her books, articles, and music, and an archive of earlier columns in this series. Stories about the border wars between cyberspace and real life are posted occasionally during the week at the net.wars Pinboard - or follow on Twitter.