Curating the curators

One of the longest-running conflicts on the Internet surrounds whether and what restrictions should be applied to the content people post. These days, those rules are known as "platform governance", and this week saw the first conference by that name. In the background, three of the big four CEOs returned to Congress for more questioning; the EU is planning the Digital Services Act; the US looks serious about antitrust action, and debate about revising Section 230 of the Communications Decency Act continues even though few understand what it does; and the UK continues to push "online harms".

The most interesting thing about the Platform Governance conference is how narrow it makes those debates look. The second-most interesting thing: it was not a law conference!

For one thing, which platforms? Twitter may be the most-studied, partly because journalists and academics use it themselves and data is more available; YouTube, Facebook, and subsidiaries WhatsApp and Instagram are the most complained-about. The discussion here included not only those three but less "platformy" things like Reddit, Tumblr, Amazon's livestreaming subsidiary Twitch, games, Roblox, India's ShareChat, labor platforms UpWork and Fiverr, edX, and even VPN apps. It's unlikely that the problems of Facebook, YouTube, and Twitter that governments obsess over are limited to them; they're just the most visible and, especially, the most *here*. Granting differences in local culture, business model, purpose, and platform design, human behavior doesn't vary that much.

For example, Jenny Domino reminded us - again - that the behaviors now sparking debates in the West are not new or unique to this part of the world. What most agree *almost* happened in the US on January 6 *actually* happened in Myanmar with far less scrutiny, despite a 2018 UN fact-finding mission that highlighted Facebook's role in spreading hate. We've heard this sort of story before, regarding Cambridge Analytica. In Myanmar and, as Sandeep Mertia said, India, the Internet of the 1990s never existed. Facebook is the only "Internet". Mertia's "next billion users" won't use email or the web; they'll go straight to WhatsApp or a local or newer equivalent, and stay there.

Mehitabel Glenhaber, whose focus was Twitch, used it to illustrate another way our usual discussions are too limited: "Moderation can escape all up and down the stack," she said. Near the bottom of the "stack" of layers of service, after the January 6 Capitol invasion Amazon denied hosting services to the right-wing chat app Parler; higher up the stack, Apple and Google removed Parler's app from their app stores. On Twitch, Glenhaber found a conflict between the site's moderatorial decision and the handling of that decision by two browser extensions that replace text with graphics, one of which honored the site's ruling and one of which overturned it. I had never thought of ad blockers as content moderators before, but of course they are, and few of us examine them in detail.

Separately, in a recent lecture on the impact of low-cost technical infrastructure, Cambridge security engineer Ross Anderson also brought up the importance of the power to exclude. Most often, he said, social exclusion matters more than technical; taking out a scammer's email address and disrupting their whole social network is more effective than taking down their more easily replaced website. If we look at misinformation as a form of cybersecurity challenge - as we should - that's an important principle.

One recurring frustration is our general lack of access to the insider view of what's actually happening. Alice Marwick is finding from interviews that members of Trust and Safety teams at various companies have a better and broader view of online abuse than even those who experience it. Their data suggests that, rather than being gender-specific, harassment affects all groups of people; in niche groups, the forms disagreements take can be obscure to outsiders. Most important, each platform's affordances are different; you cannot generalize from a peer-to-peer site like Facebook or Twitter to Twitch or YouTube, where the site's relationships are less equal and more creator-fan.

A final limitation in how we think about platforms and abuse is that the options are so limited: a user is banned or not, content stays up or is taken down. We never think, Sarita Schoenebeck said, about other mechanisms or alternatives to criminal justice such as reparative or restorative justice. "Who has been harmed?" she asked. "What do they need? Whose obligation is it to meet that need?" And, she added later, who is in power in platform governance, and what harms have they overlooked and how?

In considering that sort of issue, Bharath Ganesh found three separate logics in his tour through platform racism and the governance of extremism: platform, social media, and free speech. Mark Zuckerberg offers a prime example of the last, the Silicon Valley libertarian insistence that the marketplace of ideas will solve any problems and that sees the First Amendment freedom of expression as an absolute right, not one that must be balanced against others - such as "freedom from fear". Following the end of the conference by watching the end of yesterday's Congressional hearings, you couldn't help thinking about that as Mark Zuckerberg embarked on yet another pile of self-serving "Congressman..." rather than the simple "yes or no" he was asked to deliver.

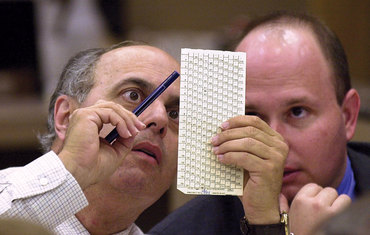

Illustrations: Mark Zuckerberg, testifying in Congress on March 25, 2021.

Wendy M. Grossman is the 2013 winner of the Enigma Award. Her Web site has an extensive archive of her books, articles, and music, and an archive of earlier columns in this series. Stories about the border wars between cyberspace and real life are posted occasionally during the week at the net.wars Pinboard - or follow on Twitter.