Layer nine

Is it possible to regulate the internet without killing it?

Before you can answer that, you have to answer this: what constitutes killing the Internet? The Internet Society has a sort of answer: a list of what it calls Internet invariants. It's a useful phrase, less vulnerable to Evgeny Morozov's charge of "solutionism" than alternatives that portray the Internet as if it were a force of nature rather than something human-designed and human-made.

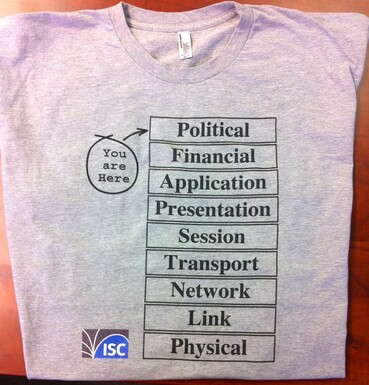

Few people watching video on their phones on the Underground care about this, but networking specialists view the Internet as a set of layers. I don't know the whole story, but in the 1980s researchers, particularly in Europe, put a lot of work into conceptualizing a seven-layer networking model, Open Systems Interconnection (OSI). By 1991, however, a company CEO told me, "I don't know why we need it. TCP/IP is here now. Why can't we just use that?" TCP/IP is the Internet's core protocol suite, so that conversation was a glimpse of the future. Even so, people still use the concepts OSI built. The bottom, physical layers are the province of ISPs and telcos. The Internet Society is concerned with the middle layers, which cover infrastructure and protocols. Layer 7, "Application", is everything users see, and what politicians fight over.

We are at a layer the OSI model failed to recognize, one identified by the engineer Evi Nemeth. We - digital and human rights activists, regulators, policy makers, social scientists, net.wars readers - are at layer 9.

So the question we started with might also be phrased, "Is it possible to regulate the application layer while leaving the underlying infrastructure undamaged?" Put that way, it seems as though it ought to be. Yet some aspects of Internet regulation clearly reach down into the lower layers. Most are surveillance-related, such as the US requirement that ISPs enable interception and data retention. Emerging demands for localized data storage, and the General Data Protection Regulation, may also penetrate more deeply while raising issues of extraterritorial jurisdiction. GDPR seeds itself into other countries the way the recursive clause of the GNU General Public License stows away in software: both require that their terms apply to onward derivatives. Localized data storage, meanwhile, demands blocks and firewalls instead of openness.

Twenty years ago, you could make this pitch to policy makers: if you break the openness of the Internet by requiring a license to start an online business, implementing a firewall, or limiting what people can say and do, you will be excluded from the Internet's economic and social benefits. Since then, China has proved that a national intranet can still fuel big businesses. Meanwhile, as the retail sector craters and a new Facebook malfeasance surfaces near-daily, today's policy maker might respond that the FAANGs (the "Fab Five") pay far less in tax than the companies they've put out of business, that employment precarity is increasing, and that the FAANGs wield disproportionate power while enabling abusive behavior and the spread of extremism and violence. We had open innovation, and this is what it brought us.

To old-timers, this argument is a tangle of confusions. As I said recently on Twitter, it's subsets all the way down: Facebook is a site on the web, and the web is an application that runs on the Internet. They are not equivalent, at least not here. In countries where Facebook's Free Basics is zero-rated, the two are functionally the same.

Somewhere in the midst of yesterday's discussion of all this, airline safety came up as an interesting comparison. That industry understood very early that safety was crucial to its success. Within 20 years of the Wright brothers' first flight in 1903, the nascent industry was lobbying the US Congress for regulation; the first airline safety bill passed in 1926. If the airline industry had instead been founded by the sort of libertarians who have dominated large parts of Internet development...well, the old joke about the exchange between General Motors and Bill Gates applies. The computer industry has gotten away with refusing responsibility for 40 years because it does not believe we'll ever stop buying its products, and we have let it.

There's a lot to say about the threat of regulatory capture even in two highly regulated industries, medicine and air travel, and maybe we'll say it here some week soon. The overall point, though, is that outside the open source community, most stakeholders in today's Internet lack the kind of overarching common goal that continues to lead airlines and airplane manufacturers to collaborate on safety even though they are fierce competitors. The computer industry, by contrast, has spent the last 50 years mocking government for being too slow to keep up with technological change while actively refusing to accept any product liability for software.

In our present context, the "Internet invariants" seem almost quaint. Yet I hope the Internet Society succeeds in protecting the Internet's openness, because I don't believe our present situation means that the open Internet has failed. Instead, the toxic combination of neoliberalism, techno-arrogance, and the refusal of responsibility (by many industries; just today, see pharma and oil) has undermined the social compact the open Internet reflected. Regulation is not the enemy. *Badly conceived* regulation is. So the question of what good regulation looks like is crucial.

Illustrations: Evi Nemeth's adapted OSI model, seen here on a T-shirt historically sold by the Internet Systems Consortium.

Wendy M. Grossman is the 2013 winner of the Enigma Award. Her Web site has an extensive archive of her books, articles, and music, and an archive of earlier columns in this series. Stories about the border wars between cyberspace and real life are posted occasionally during the week at the net.wars Pinboard - or follow on Twitter.